Conroe, Sandy Bridge, Ivy Bridge, Haswell, Skylake, and anything in between, we’ve overclocked them all. Each had its pros and cons, but the standout architecture in that list is Sandy Bridge. Good samples were capable of achieving stable overclocks of 5GHz on air cooling. It’s a landmark that has proven elusive, until now. Finally, we have a worthy successor: Kaby Lake. Intel’s latest processors make 5GHz overclocks possible with air cooling, and you can even go beyond that. No need for lengthy intros when excitement levels are at fever pitch. Let’s get down to business!

Frequency expectations – i7-7700K

Our R&D dept has tested hundreds of CPUs and found the following frequency ranges are workable for overclocking Kaby Lake i7-7700K CPUs:

- 20% of samples are stable with Handbrake/AVX workloads when running at 5GHz CPU core speeds.

- The AVX offset parameter can be used to clock 80% of CPU samples to 5GHz for light workloads, falling back to 4.8GHz for applications that use AVX code.

- The ASUS Thermal Control Tool has now been ported into UEFI and can be used to configure profiles for light and heavy (non-AVX) workloads to extend CPU core overclocking margins on air and water cooling by up to 300MHz.

- Memory frequency: The best CPU samples can achieve speeds of DDR4-4133 with four DIMMs (ROG Maximus IX series of motherboards needed). DDR4-4266 is possible on the Maximus IX Apex. For mainstream use, we recommend opting for a memory kit rated no faster than DDR4-3600, as all CPUs are capable of achieving such speeds.

CPU power consumption and cooling requirements

One of the questions that always arises when we’re dealing with overclocking is “how much Vcore is safe?” Generally, we recommend constraining an overclock to stay below 2 X the stock power consumption of the processor under full load. To work out what that figure is, we can measure the CPU’s power draw via the EPS 12V power line using an oscilloscope and current probe.
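The arithmetic behind such a measurement is simply rail voltage multiplied by current. Below is a minimal Python sketch, assuming a nominal 12.0V rail and a hypothetical handful of current-probe samples (the values are illustrative, not taken from our captures). Bear in mind that a reading taken on the EPS 12V line is the power going into the motherboard’s VRM, so it includes conversion losses as well as what the CPU itself consumes.

```python
from statistics import mean

EPS_RAIL_VOLTS = 12.0  # assumption: nominal EPS 12V rail voltage


def average_power_watts(current_samples_amps, rail_volts=EPS_RAIL_VOLTS):
    """Average power over the EPS 12V line, using P = V * I."""
    return rail_volts * mean(current_samples_amps)


# Hypothetical current-probe samples in amps, for illustration only.
samples = [3.6, 3.8, 3.75, 3.85]
print(f"Average draw: {average_power_watts(samples):.1f} W")
```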

To generate workloads, we tested with the brute-force loads of Prime95’s small FFT tests (AVX2 version) and also with ROG Realbench, which uses real-world rendering and encoding tests. Using both “synthetic” and real-world tests allows us to establish voltage recommendations for both scenarios.

Don’t fret if you can’t understand the captures, we’ve performed the calculations for you and show the figure in Watts beneath each image.

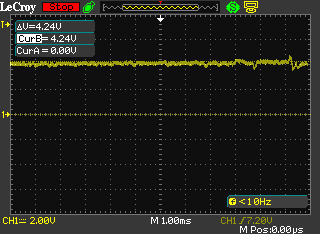

Stock frequency, ROG Realbench load power = ~45W

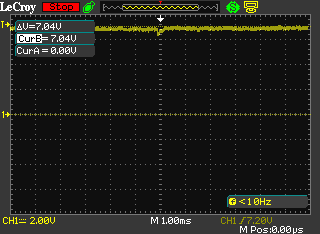

Stock frequency, Prime95 load power = ~76W

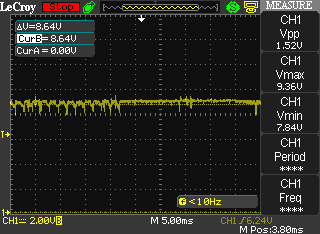

5GHz CPU frequency, ROG Realbench load power = ~93W

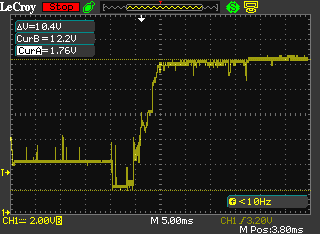

5GHz CPU frequency, Prime95 load power = ~131W

With the data acquired, we can identify where our “self-imposed” limits lie. We will add our obligatory disclaimer at this point and state that overclocking has risks and voids warranty unless you opt for Intel’s Performance Tuning Protection Plan. Keep that in mind, as there’s no way for us to guarantee things won’t go awry if you overclock a CPU. All risks are your own. What follows is nothing more than a set of guidelines based upon our own experience.

In order to run Prime95 at 5GHz, our CPU sample requires 1.35Vcore. Power consumption under that load comes in at 131 Watts, which is comfortably below 2X the stock power consumption under Prime95 (~76 Watts). That said, CPUs are usually power rated by application power rather than Prime95, hence leaving some headroom below the 2X figure is prudent.
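The 2X guideline can be checked with a few lines of Python. This is a rough sketch using the approximate wattages measured above; the function and variable names are our own, and the ceiling is taken from the stock Prime95 figure, as in the paragraph above.

```python
STOCK_PRIME95_WATTS = 76  # measured at stock frequency (approximate)

# Measured at 5GHz (approximate figures from the captures).
OVERCLOCKED_WATTS = {"Realbench": 93, "Prime95": 131}


def power_ceiling(stock_watts, factor=2.0):
    """Self-imposed limit: a multiple of the stock full-load power draw."""
    return stock_watts * factor


def headroom_watts(workload):
    """Watts remaining below the ceiling for a given 5GHz workload."""
    return power_ceiling(STOCK_PRIME95_WATTS) - OVERCLOCKED_WATTS[workload]


for name in OVERCLOCKED_WATTS:
    print(f"{name}: {headroom_watts(name):.0f} W below the ceiling")
```

With these numbers the ceiling works out to 152 Watts, leaving roughly 21 Watts of margin under Prime95 at 5GHz.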

So, if you’re running Prime95 as a short-term stress test, we advise using no more than 1.35Vcore with a 7700K CPU. If the CPU has not been de-lidded/re-lidded for a thermal paste upgrade, you’re likely to run out of thermal headroom around that voltage anyway.

Now, if you happen to be the type of user that spends more time running Prime95 than using a PC for other tasks, then we advise you reduce the maximum Vcore. “By how much?”, you ask. Well, you’re on your own for that. Remember, it’s current that degrades or kills a CPU. Be mindful of how much load you’re placing on the chip long-term and act accordingly. There’s nothing worse than pushing insane levels of current through the die and then moaning when there’s degradation.

Realbench is far kinder to the silicon from a power consumption point of view. At 5GHz and 1.35 Vcore, the power drawn is only 93 Watts. The risk of degradation is far lower than when subjecting the CPU to the brutality of AVX-enabled versions of Prime95. In fact, you could push up to 1.40Vcore with Realbench, and still keep consumption below the power levels drawn by Prime95 at 1.35 Vcore, but that’s as far as we’d go for sustained exposure to such workloads.

Immediately apparent from this data is the fact that Kaby Lake is very power efficient. Sub-150-Watt power consumption when dealing with the nasty loads of Prime95 at 5GHz is incredible. Even if you were to find a gem Skylake CPU capable of operating at the same frequency, power levels would be up around the 200 Watt mark to obtain stability. The upshot is that Kaby Lake’s efficiency takes the focus off motherboard power delivery to a reasonable extent. Any motherboard costing more than $150 should be capable of overclocking these CPUs to the limits when using air or water cooling. That includes all the ASUS motherboards in our Z270 guide.

With the power side of things dealt with, we can take a look at whether the Kaby Lake architecture responds to lower temperatures when overclocking.

| CPU core frequency | Cooling | Vcore | Peak CPU temperature in Celsius | Realbench - 2 Hours Pass? |
|---|---|---|---|---|
| 5GHz | Dual radiator AIO (water temp 28C) | 1.28V | 70C | No |
| 5GHz | Triple radiator custom loop in temp controlled room. Water temp 18C | 1.28V | 63C | Yes |
| 5GHz | Noctua NH-D15 | 1.28V | 73C | No |
| 5GHz | Dual radiator AIO | 1.32V | 72C | Yes |
| 5GHz | Air - Noctua NH-D15 | 1.32V | 78C | Yes |

From an overclocking perspective, the only advantage a dual radiator AIO cooler has over the Noctua NH-D15 heatsink is lower CPU temperatures. Unfortunately for us, the temperature drop isn’t significant enough to reduce Vcore requirements. To obtain a Vcore advantage, our CPU sample requires load temps to be kept under 65 Celsius, which calls for a water chiller holding the coolant temps to 18 Celsius, or an ambient room temperature of 13~15 Celsius with a custom water loop and 3 x 120mm radiator. Not exactly a mainstream scenario. Of course, there is variance between samples, so our results may not be reflective of all CPUs.

De-lidding the CPU’s IHS (integrated heat spreader), replacing the thermal paste with something more thermally conductive, and then re-lidding, can yield benefits. We’ve seen temperature drops of 13~25 Celsius when the procedure is performed correctly.

If you’re wondering why Intel uses paste that’s less thermally efficient than the exotic mixes available to consumers, consider all the thermal cycling a CPU is subjected to over a few years. Heat can cause thermal pastes to fracture, creep, or pump out over time, leading to hot-spots on the die. Intel’s choices are likely based on long-term evaluations and ease of mass-application on the production line. With that in mind, if you do happen to embark on the de-lidding journey, it’s probably wise to re-apply the paste periodically, especially if you’re using the CPU in a vertically installed motherboard.

The process of de-lidding is made easy by the availability of tools such as the Delid-Die-Mate 2, designed by Roman Hartung (AKA Der8auer), and the Rockit 88 de-lidding tool. Both are simple to use, so choose whichever is available to you. The old-fashioned method of using a razor blade to cut through the sealant is riddled with potential for failure, as it’s easy to damage the PCB, resulting in a partially-working or dead CPU – something we have experienced first-hand.

The Delid Die Mate 2 – makes de-lidding easy

For our de-lidding expedition, we used Thermal Grizzly Conductonaut between the IHS and CPU, and also between the IHS and water block. Conductonaut is a liquid metal compound with excellent thermal conductivity – better than any other compound we’ve used to date. The application resulted in a 13~15 Celsius drop in core temperatures, leading to improved overclocking stability at lower voltages:

| CPU core frequency | Cooling | Vcore | Peak CPU temperature in Celsius | Realbench - 2 Hours Pass? |
|---|---|---|---|---|
| 5GHz | Dual Radiator AIO (water temp 28C) | 1.28V | 55C | Yes |
| 5GHz | Noctua NH-D15 | 1.28V | 62C | Yes |

Previously, the dual-radiator AIO setup wasn’t stable at 5GHz with anything less than 1.328V. The re-pasting adventure provided temp drops that are substantial enough to reduce required Vcore to 1.28V, which is in line with the previous result with water temps at 18 Celsius. The additional headroom also allows us to push the CPU 100MHz higher with a mere 16mV voltage hike (1.344 Vcore). Prior to de-lidding, the CPU required 1.38Vcore for the same frequency. The gains are real. Just bear in mind that de-lidding will void Intel’s warranty. Interestingly, we have heard that some retailers are selling re-pasted CPUs and providing their own warranty (Caseking and OCUK). It may be a worthwhile option if fiddly DIY doesn’t sit well with you.

Memory frequency expectations

From everything we have seen to date, Kaby Lake’s overclocking prowess also applies to the memory side of the bus. Speeds of up to DDR4-4133 are possible with four memory modules on our ROG Maximus series boards, while the Maximus IX Apex and Strix Z270I Gaming models manage DDR4-4266 thanks to their optimized two-slot design.

As always, we’re going to recommend being conservative and opting for memory kits rated no faster than DDR4-3600 if you favour plug-and-play. Above those speeds, manual tuning can be required due to variance between CPUs and other aspects of the system. Leave faster kits to enthusiasts who like to spend their time tweaking the system.

Also of merit for plug-and-play aspirants: be sure not to combine memory kits. Purchase a single kit rated at the memory frequency and density you wish to run. When combining memory kits – even of the same make and model – there’s no guarantee that the kits will run at the rated timings and frequency of a single kit. In cases where such configurations do not work, a lot of manual tuning is required, which isn’t for those of us who are light on experience and short on time.

For the enthusiasts out there, right now, all the latest high-speed memory kits are using the famous Samsung B-die ICs. It’s good stuff. Should temptation get the better of you and you’ve got your sights set on a DDR4-4000+ kit, be sure to provide adequate airflow over the memory modules. Good B-die based modules are sensitive to temperature, which can affect their stability. A capable memory cooler pays dividends if you intend to push the memory hard.

G.Skill’s DDR4-4266 TridentZ memory kit – 16GB of Samsung B-die goodness

Outside that, be mindful that some CPUs don’t like running high memory speeds when nearing the upper-end of their overclocking potential. In such cases, reducing the memory frequency by a ratio or two can help stabilize CPU core frequency without requiring additional Vcore.

Also noteworthy is that the highest working memory ratio is DDR4-4133. Higher speeds require adjustment of BCLK with the DDR4-4133 (or lower) ratio selected. If you do purchase a memory kit rated faster than DDR4-4133, don’t be alarmed if you see BCLK being changed to a higher value when you select XMP. The change is mandatory.
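To get a feel for the size of the BCLK change involved, here is a rough Python sketch. It assumes that memory speed scales linearly with BCLK from the DDR4-4133 ratio at a 100MHz base clock; the value XMP actually selects on a given board may differ, and the function names are our own. Because the CPU core frequency is also derived from BCLK, the sketch shows the effect on the cores as well.

```python
BASE_BCLK_MHZ = 100.0
MAX_RATIO_SPEED = 4133  # highest working memory ratio (DDR4-4133 at 100MHz BCLK)


def required_bclk_mhz(target_speed, ratio_speed=MAX_RATIO_SPEED,
                      base_bclk=BASE_BCLK_MHZ):
    """BCLK needed to reach target_speed from a given memory ratio.

    Assumes memory speed scales linearly with BCLK.
    """
    if target_speed <= ratio_speed:
        return base_bclk  # a native ratio covers it, no BCLK change needed
    return base_bclk * target_speed / ratio_speed


def core_frequency_mhz(core_ratio, bclk_mhz):
    """CPU core frequency is the core ratio multiplied by BCLK."""
    return core_ratio * bclk_mhz


bclk = required_bclk_mhz(4266)
print(f"BCLK for DDR4-4266: {bclk:.1f} MHz")
print(f"50X core ratio at that BCLK: {core_frequency_mhz(50, bclk):.0f} MHz")
```

Under these assumptions a DDR4-4266 kit needs a BCLK of a little over 103MHz, so the core ratio may need to come down a notch to keep the CPU frequency where you want it.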

Uncore frequency

Uncore frequency – AKA Cache frequency in the ASUS firmware – can also be overclocked. The actual gains from overclocking the Uncore are application dependent, as it affects L3 cache access times. Generally, we prefer to focus on CPU frequency first, followed by memory frequency, and then we experiment with Uncore frequency.

Voltage-wise, the Uncore shares the CPU Vcore rail. As a result, when we increase Vcore, we’re also increasing the Uncore voltage. That’s why there is some sense in overclocking the Uncore; you might as well claim the overclocking margin that is on the table, as you’ll be increasing voltage to the Uncore domain when you overclock the CPU.

From a frequency perspective, good CPU samples are capable of keeping Uncore frequency within 300MHz of the CPU core frequency when the processor is overclocked to the limits using air and water cooling. However, there may be a trade-off for the Uncore versus memory frequency on some CPU samples. If pushing memory frequency beyond DDR4-3800, it may be difficult to obtain stability with an Uncore frequency over 4.7GHz when the CPU cores are overclocked past 5GHz.

Typically, when Vcore is at 1.35V, Uncore frequencies between 4.7~4.9GHz are possible depending upon the quality of the CPU sample. As mentioned in the previous paragraph, CPU core and memory frequency affect how far the Uncore will overclock at a given level of voltage.

AVX offset

The AVX offset parameter allows us to define a separate CPU core multiplier ratio for applications that use AVX code. When AVX code is detected, the CPU Core multiplier ratio will downclock by the user-defined value. This feature is useful because AVX workloads generate more heat within the processor and they require more core voltage to be stable. Ordinarily, an overclock would be constrained by the hottest, most stressful application we run on the system. By using AVX offset, we can run non-AVX workloads, which don’t consume as much power, at a higher CPU frequency than AVX applications.

As an example, that means we can apply a 50X ratio with a BCLK of 100MHz, resulting in a 5GHz overclock for non-AVX applications. We can then set the AVX offset parameter to a value of 2 (or lower if required), which will reduce the CPU core ratio to 48X (4.8GHz) when an AVX workload is detected. This ensures the system stays within its thermal envelope, without our overclock being constrained solely by the AVX workload.

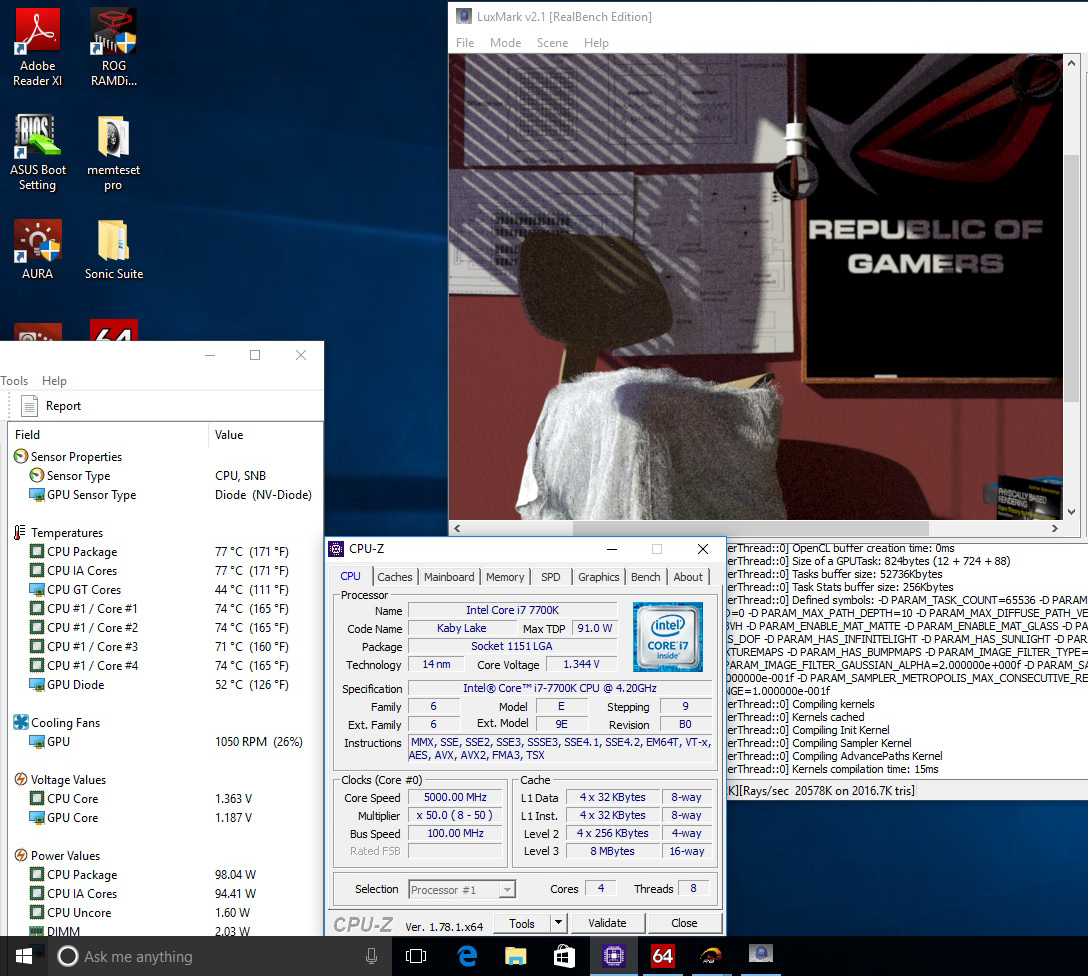

5GHz overclock maintained during non-AVX workloads

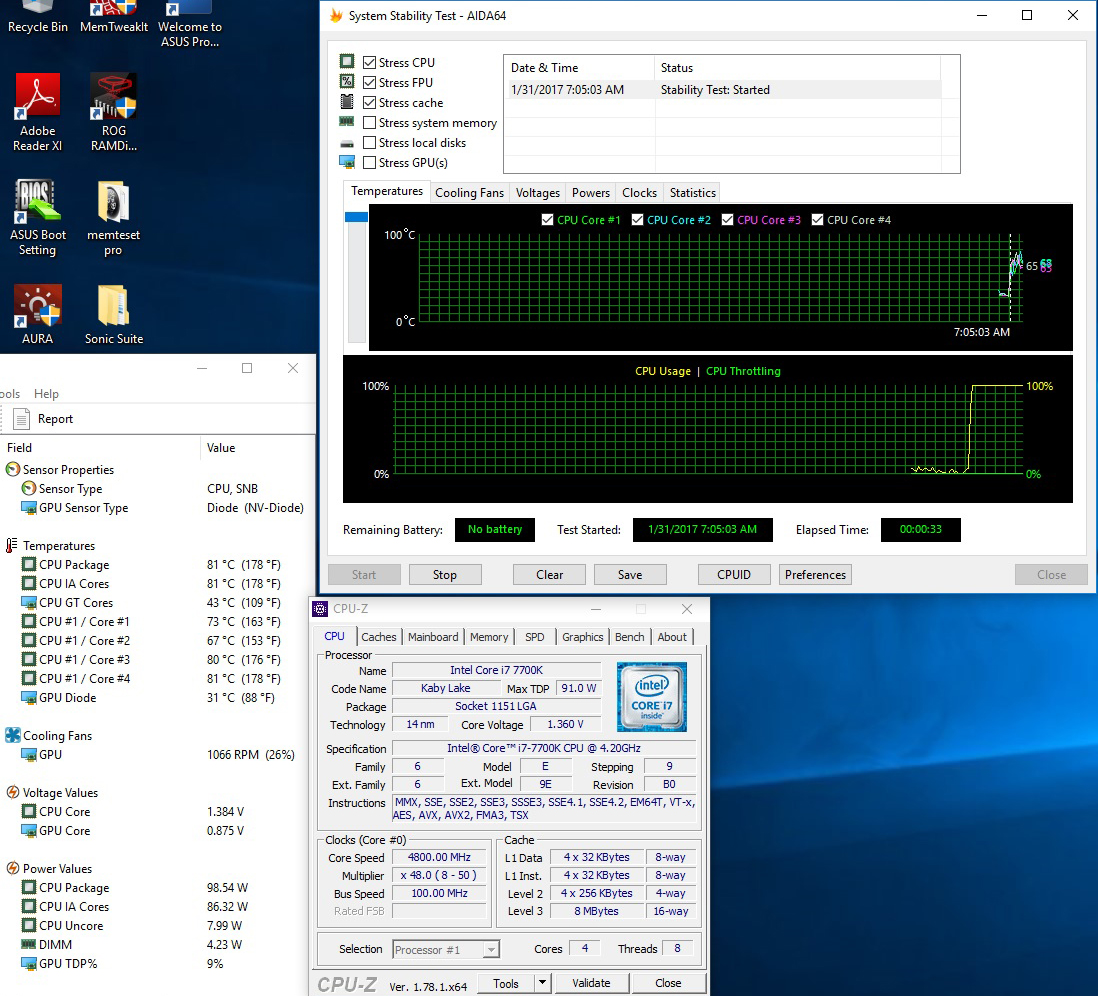

And downclocked to 4.8GHz during AVX workloads (AVX offset = 2)
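The arithmetic behind the offset is straightforward. The following Python sketch models it; the function and parameter names are our own and simply mirror the description above, they are not firmware settings.

```python
def core_frequency_mhz(ratio, bclk_mhz=100.0, avx_offset=0, avx_active=False):
    """Effective core frequency, with the AVX offset subtracted from
    the core ratio only while an AVX workload is running."""
    effective_ratio = ratio - avx_offset if avx_active else ratio
    return effective_ratio * bclk_mhz


# 50X ratio with an AVX offset of 2, as in the example above.
print(core_frequency_mhz(50, avx_offset=2, avx_active=False))  # non-AVX load
print(core_frequency_mhz(50, avx_offset=2, avx_active=True))   # AVX load
```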

For the most part, AVX offset works well in practice. The only caveat we have experienced is that we cannot apply a separate Vcore for the AVX ratio. Being able to define the amount of Vcore applied would help us to tune each CPU sample to the optimal voltage for AVX workloads. Crude workarounds for this limitation include using Offset Mode for Vcore to reduce the voltage, or manipulating load-line calibration, which affects how much the voltage (Vcore) sags under load. Both of these workarounds have implications for stability, so they cannot be employed in all cases. The option to manually set a core voltage for the AVX Offset ratio would be advantageous. We have already spoken to Intel about this and made suggestions for future iterations of AVX offset. The changes required are significant, so we likely won’t see them for a few generations; ergo, don’t expect them for Kaby Lake.

The other issue with AVX offset is that it only detects AVX workloads. There are applications and even some games that are multi-threaded, generating higher thermal loads than applications that aren’t as intensive. In such cases, overclocking headroom is more thermally constrained than it should be. Fortunately, there’s an exclusive ASUS workaround for the issue, and it’s called CPU overclocking temperature control…

ASUS CPU overclocking temperature control

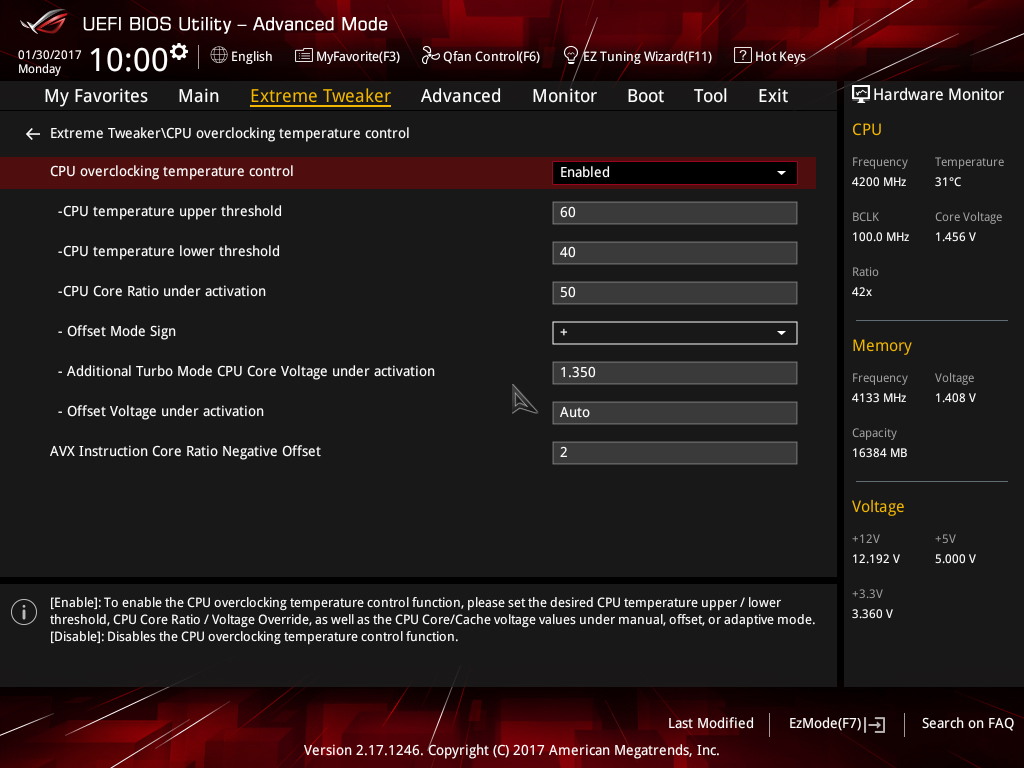

First unveiled on the X99 platform back in June 2016, the ASUS Thermal Control utility makes its way to the Z270 platform. This time, instead of being software based, it’s coded directly into the UEFI, and gets a name change to CPU overclocking temperature control.

The temperature control options allow us to configure two CPU core frequency targets directly from firmware: one for light-load applications, such as games, and the other for more stringent workloads. Both overclock targets can be assigned separate voltage levels and temperature targets so that we can run games and light-load applications at higher frequencies than workloads that generate more heat. The feature works by monitoring temperature and then applying user-configured voltages and multiplier ratios when the defined thermal thresholds are breached.

Unlike AVX offset, the temperature control mechanism isn’t limited solely to AVX workloads. Any application that generates sufficient heat to breach the user-defined temperature threshold will result in the operating frequency and voltage being lowered to the user-applied values. In essence, this provides more flexibility than the AVX offset parameter and deals with some of its drawbacks.
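Conceptually, the mechanism boils down to a threshold check. The Python sketch below is only a simplified model of the behaviour described above, not the firmware’s actual logic: the profile values and the 75C threshold are hypothetical, and it ignores any hysteresis or timing the real implementation may apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    ratio: int    # CPU core multiplier ratio
    vcore: float  # core voltage in volts


def select_profile(cpu_temp_c, light, heavy, threshold_c):
    """Pick the heavy-load profile once the temperature threshold is breached."""
    return heavy if cpu_temp_c > threshold_c else light


# Hypothetical user-configured values, for illustration only.
light_load = Profile(ratio=50, vcore=1.32)
heavy_load = Profile(ratio=48, vcore=1.28)

for temp in (60, 80):
    print(temp, select_profile(temp, light_load, heavy_load, threshold_c=75))
```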

Depending upon the cooling used, an extra 100~300MHz of overclocking headroom can be cajoled from a CPU with the ASUS temperature control features. It’s a handy tool for enthusiasts who want to wring out every MHz of headroom from the system.